Updated almost 8 years ago by Jessie Lee

curl http(s)://web.sitecurl -I http(s)://web.site

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#Pre-requisites: DNS access

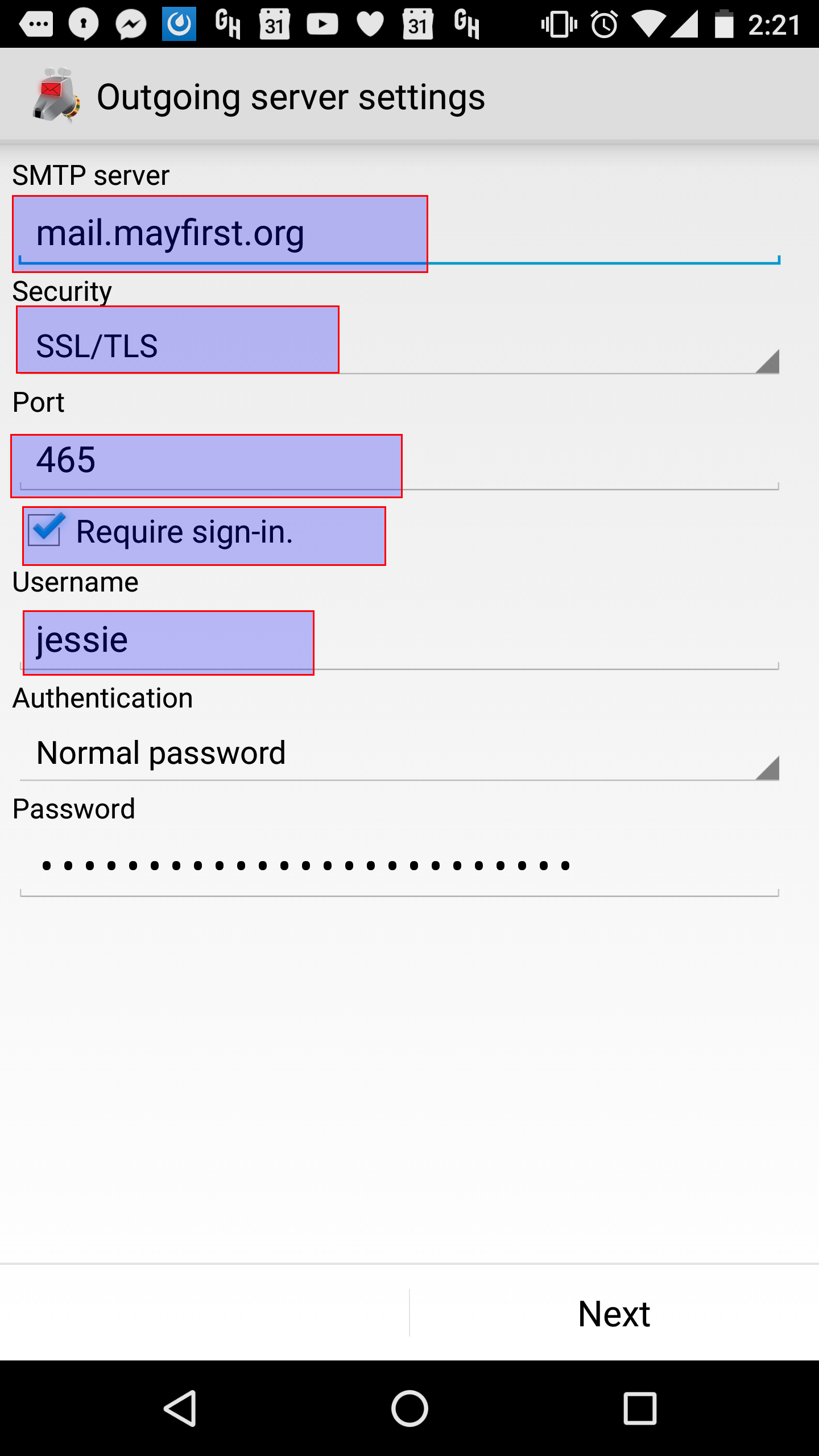

You now have two options for SMTP setup:

ssl:// and select a port of 465.See also here: http://docs.aws.amazon.com/ses/latest/DeveloperGuide/postfix.html

Postfix will give higher performance on large mailings, and also does a much better job of handling resends, greylisting, etc. This should be implemented wherever possible.

/etc/postfix/main.cf:#jon@palantetech.coop SES setup relayhost = email-smtp.us-west-1.amazonaws.com:25 smtp_sasl_auth_enable = yes smtp_sasl_security_options = noanonymous smtp_sasl_password_maps = hash:/etc/postfix/sasl_passwd smtp_use_tls = yes smtp_tls_security_level = encrypt smtp_tls_note_starttls_offer = yes #This last line isn't needed, I'm pretty sure #smtp_tls_CAfile = /etc/ssl/certs/ca-bundle.crt #jon@palantetech.coop rate limiting to 4 messages/second for now smtp_destination_rate_delay = 1s smtp_destination_concurrency_limit = 4

/etc/postfix/sasl_passwd in the format:email-smtp.us-east-1.amazonaws.com:25 username:password

postmap /etc/postfix/sasl_passwd.There's code here to use a Amazon SNS to a bounce processing address instead of the typical bounce processing here. I have NOT tested this, but I don't think this provides an advantage over typical bounce processing. An SNS endpoint in CiviCRM COULD provide higher performance than IMAP/POP-based bounce processing, I don't think this solution is it.

So instead, just see Set up local bounce processing.

In testing if CiviCRM reports that everything's been sent correctly, but then no emails are received, the problem could be with Postfix authenticating to SES. /var/log/mail.log might contain entries like this.

Aug 9 11:37:48 XXXXXXXX postfix/smtpd[24665]: connect from localhost[127.0.0.1] Aug 9 11:37:48 XXXXXXXX postfix/smtpd[24665]: A30A4624F1: client=localhost[127.0.0.1] Aug 9 11:37:48 XXXXXXXX postfix/cleanup[24669]: A30A4624F1: message-id=<20160809163748.A30A4624F1@XXXXXXXX.example.org> Aug 9 11:37:48 XXXXXXXX postfix/qmgr[24664]: A30A4624F1: from=<XXXXXXXX@example.org>, size=453, nrcpt=1 (queue active) Aug 9 11:37:48 XXXXXXXX postfix/smtpd[24665]: disconnect from localhost[127.0.0.1] Aug 9 11:37:48 XXXXXXXX postfix/smtp[24670]: warning: SASL authentication failure: No worthy mechs found Aug 9 11:37:48 XXXXXXXX postfix/smtp[24670]: A30A4624F1: SASL authentication failed; cannot authenticate to server email-smtp.us-west-2.amazonaws.com[54.68.106.242]: no mechanism available Aug 9 11:37:48 XXXXXXXX postfix/smtp[24670]: warning: SASL authentication failure: No worthy mechs found Aug 9 11:37:48 XXXXXXXX postfix/smtp[24670]: A30A4624F1: SASL authentication failed; cannot authenticate to server email-smtp.us-west-2.amazonaws.com[52.35.58.187]: no mechanism available Aug 9 11:37:49 XXXXXXXX postfix/smtp[24670]: warning: SASL authentication failure: No worthy mechs found Aug 9 11:37:49 XXXXXXXX postfix/smtp[24670]: A30A4624F1: to=<XXXXXXXX@example.com>, relay=email-smtp.us-west-2.amazonaws.com[52.35.228.26]:25, delay=0.39, delays=0.04/0.01/0.34/0, dsn=4.7.0, status=deferred (SASL authentication failed; cannot authenticate to server email-smtp.us-west-2.amazonaws.com[52.35.228.26]: no mechanism available)

If that's the case, you might need to install some additional libraries, namely the libsasl2-modules package in Debian/Ubuntu systems.

EC2 severely throttles outgoing mail on ports 25/465/587. You need to submit a request to Amazon to have this throttle lifted. This is separate from any user-configurable firewalling! You can do that (and set up PTR) here: https://portal.aws.amazon.com/gp/aws/html-forms-controller/contactus/ec2-email-limit-rdns-request

Modules to be installed

OpenLayers javascript libraries installed in sites/all/libraries.

The data format for mapping with OpenLayers in Drupal is handled by content types with particular data type fields associated with those content types. We've covered two field data types, which allow us to map points, more specifically longitude and latitude. Those fields are Postal Address field and Geofield.

If we use postal address field, we also have to use geofield. First add the postal address field; there's only a single widget option, Dynamic address form. In the configuration, set this field to be required, filter to particular countries if that's needed for data entry. The rest of the defaults should be fine. Then add a geofield to the content type in order to transform the entered address into a longitude and latitude. The widget for this field needs to be set to Geocode from another field. Set this field to be required, choose the address field as the Geocode from field that will be transformed into geolocation data, and finally choose the geocoding service. We used Google during the demo, but there are privacy issues to be discussed around actual data. When entering data, only the address field appears on the edit form, but when viewing the entered content the longitude and latitude appear as well.

If we use just the geofield, there are several options. We've covered the map-based data entry option. When adding the geofield to the content type, select Openlayers Map as the widget. Set the field to be required and adjust the default map center and zoom for data entry if that's needed. The rest of the defaults should be fine to accept. When editing the content, the map appears for the user to enter the data, but only the longitude and latitude appear when viewing it.

The geofield supports other types of data, such as direct input of longitude and latitude and two dimensional shapes.

With some data now entered as content in Drupal, we need to create a map. Creating a map with OpenLayers in Drupal requires three componentsThe map should now be rendered at the path given in the last step.

This is documentation and scripts for using Borg backups with backupninja. This relies heavily on the work of Jon Goldberg, Benzhaomin, and Guillaume Subiron.

From the Borg docs:

BorgBackup (short: Borg) is a deduplicating backup program. Optionally, it supports compression and authenticated encryption.

The main goal of Borg is to provide an efficient and secure way to backup data. The data deduplication technique used makes Borg suitable for daily backups since only changes are stored. The authenticated encryption technique makes it suitable for backups to not fully trusted targets.

Main borg docs: https://borgbackup.readthedocs.io/en/stable/

List backups

borg list /path/to/repo

Mount a single backup

borg mount /path/to/repo::individual_backup /tmp/mymountpoint

Check viability of the repo

borg check -v /path/to/repo

For help fixing a repo that fails borg check see this example from work on VCW osTicket.

If the backup has a passphrase, you'll need to enter it to do any of those commands. The passphrase should be in the client credentials, and will be in the /etc/backup.d/ jobs

Install necessary packages, currently the best way to do that is by using jessie-backports.

aptitude -t jessie-backports install python3-msgpack borgbackup

The files borg, borglocal, borg.helper, and borglocal.helper should be placed in /usr/share/backupninja, permissions 644, owned by root:root.

cd /usr/share/backupninja wget https://redmine.palantetech.coop/attachments/download/7133/borg && wget https://redmine.palantetech.coop/attachments/download/7135/borg.helper && wget https://redmine.palantetech.coop/attachments/download/7136/borglocal && wget https://redmine.palantetech.coop/attachments/download/7134/borglocal.helper chown root:root borg* && chmod 644 borg*

The files 70-76 are example backupninja jobs, which would go in /etc/backup.d, permissions 600, owned by root:root.

cd /etc/backup.d wget https://redmine.palantetech.coop/attachments/download/7130/70-local.borglocal wget https://redmine.palantetech.coop/attachments/download/7132/71-mfpl.borg wget https://redmine.palantetech.coop/attachments/download/7138/75-borglocalbackupcheck.sh wget https://redmine.palantetech.coop/attachments/download/7137/76-borgbackupcheck.sh chown root:root 7* && chmod 600 7*

Job 70 is for backing up to a usb drive, which will be mounted at the beginning of every job and unmounted at the end.

Necessary variables to change: device uuid, filesystem type, included and excluded files, directory to mount the usb drive to, passphrase

Job 71 is for backing up to either a local folder or a folder accessible by ssh on a remote host.

Necessary variables to change: included and excluded files, directory to put the backups in, host, ssh user, passphrase

Jobs 75 and 76 are for checking the viability of the job 70 and 71 backups respectively, and require coping over the variables from those jobs.

Please change the passphrase variable in these jobs to something other than "PASSPHRASE", or leave it empty, which will turn off encryption.

There is a version of the borg handler now included in newer versions of backupninja.

That handler requires two changes to job 71:

documentation:

https://0xacab.org/riseuplabs/backupninja/merge_requests/1

https://labs.riseup.net/code/projects/backupninja

https://borgbackup.readthedocs.io/en/stable/

Find copies of our bylaws here in both PDF and DOC format.

Updated over 10 years ago by Jon Goldberg

Your website and/or CiviCRM database must be placed on a computer that's always connected to the Internet in order to be accessible to your visitors and/or staff. While theoretically you can take an unused computer in your office and put the site on it, this has multiple drawbacks. First, if your office's Internet connection goes out, the site becomes unavailable to people outside your office. Second, you take on the responsibility of maintaining the hardware - if the computer breaks, your site is offline until you can fix it.

For these reasons, unless your organization is in a position to support these issues, it's recommended to lease space from a "web hosting company", aka a "webhost". The webhost will maintain the hardware and Internet connection for your site. For small organizations, your choices fall into three categories: Shared hosting, Virtual Private Servers, and Platform-as-a-Service.

Here are the shared webhosts our cients oten use: Shared Webhost options

With a VPS, you're given a private server with a guaranteed level of resources. Because it's your own server, you can set it up as you need, and because your resources are guaranteed, you aren't affected by the activities of others. VPS price is determined by the amount of resources you get, and also whether you pay the webhost to maintain the server for you. You can also purchase VPS management services from Palante.

Some clients on a very low budget will lease an unmanaged VPS, but only maintain it during emergencies. While a bit risky, several of our clients have gone years with this approach successfully.

Here are some VPS companies Palante uses: VPS webhost options

With PaaS, you can hire a company to manage not just the server, but the application running on the server - for instance, WPEngine for Wordpress installations, or Pantheon for Drupal and Wordpress. These choices tend to be a bit more expensive than general hosting, but can be helpful if you expect to see spikes in demand, since these platforms will automatically allocate (and charge for) resources as they are needed.

Updated over 7 years ago by Morgan Robinson

Disabling the requirement for CVV (credit card security code)

Payment Processor Test Cards

Exporting Raiser's Edge for CiviCRM

Exporting Salsa for CiviCRM

Exporting Filemaker for CiviCRM

Fundraising Reports

Overrides for dev/staging sites

Amazon SES for CiviCRM

Constant Contact sync for CiviCRM

Set up local bounce processing

Topics for "CiviCRM 102" training

CiviCRM launch checklist

CiviCRM multi-site checklist

Shared folders for migration

Migrating CiviCRM to a new site

PayPal

Updated almost 10 years ago by Jon Goldberg

This is the documentation for the CiviCRM Kettle Transforms available on Github here.

Updated over 10 years ago by Jon Goldberg

Protip: Did you remember to comment out CIVICRM_DOMAIN_ID and CIVICRM_UF_BASEURL?

If you're using a "true" multi-site, where each site has its own civicrm.settings.php, the documentation on the CiviCRM wiki will suffice. Even if you're using Wordpress or Domain Access, there's good documentation there (I know, I wrote most of it).

The trick is to write code that can determine your domain based on the URL. Here is an example of how to do that using Domain Access or Wordpress with subdomain multi-site:

switch ($_SERVER['SERVER_NAME']) {

case 'www.xxx.org':

case 'xxx.org':

define( 'CIVICRM_DOMAIN_ID', 1 );

define( 'CIVICRM_DOMAIN_GROUP_ID', 2);

define( 'CIVICRM_DOMAIN_ORG_ID', 105383);

define( 'CIVICRM_UF_BASEURL' , 'http://www.xxx.org/' );

$civicrm_setting['URL Preferences']['userFrameworkResourceURL'] = 'http://www.xxx.org/sites/all/modules/civicrm';

break;

case 'cdp.xxx.org' :

define( 'CIVICRM_DOMAIN_ID', 2 );

define( 'CIVICRM_DOMAIN_GROUP_ID', 19);

define( 'CIVICRM_DOMAIN_ORG_ID', 106726);

define( 'CIVICRM_UF_BASEURL' , 'http://cdp.xxx.org/' );

$civicrm_setting['URL Preferences']['userFrameworkResourceURL'] = 'http://cdp.xxx.org/sites/all/modules/civicrm';

break;

// etc.

}

Wordpress with subfolder multi-site is a little trickier, here's what I've got. Note that this allows you to set a POST variable when running cron (via wget) to specify the correct site:

$multi_site_path = explode("/", $_SERVER[REQUEST_URI]);

if ($multi_site_path[6] == "cron.php") {

$multi_site_choice = $_POST["site"];

} else {

$multi_site_choice = $multi_site_path[1];

}

switch ($multi_site_choice) {

case 'wp-admin':

case '':

case false:

define( 'CIVICRM_DOMAIN_ID', 1 );

define( 'CIVICRM_DOMAIN_GROUP_ID', 84);

define( 'CIVICRM_DOMAIN_ORG_ID', 1);

define( 'CIVICRM_UF_BASEURL' , 'http://www.yyy.org/' );

break;

case 'hcnmd':

define( 'CIVICRM_DOMAIN_ID', 2 );

define( 'CIVICRM_DOMAIN_GROUP_ID', 74);

define( 'CIVICRM_DOMAIN_ORG_ID', 67459);

define( 'CIVICRM_UF_BASEURL' , 'http://www.yyy.org/hcnmd' );

break;

// etc.

}

You need to run cron separately for each site to run its scheduled jobs. Here's a good example of how to set it up.

0,15,30,45 * * * * /usr/bin/wget --config=/home/members/xxx/sites/xxx.org/users/xxx/xxx.org/include/civicrm-wgetrc http://www.xxx.org/wp-content/plugins/civicrm/civicrm/bin/cron.php 1,16,31,46 * * * * /usr/bin/wget --config=/home/members/xxx/sites/xxx.org/users/xxx/xxx.org/include/civicrm-wgetrc-site2 http://www.xxx.org/wp-content/plugins/civicrm/civicrm/bin/cron.php 2,17,32,47 * * * * /usr/bin/wget --config=/home/members/xxx/sites/xxx.org/users/xxx/xxx.org/include/civicrm-wgetrc-site3 http://www.xxx.org/wp-content/plugins/civicrm/civicrm/bin/cron.php 3,18,33,48 * * * * /usr/bin/wget --config=/home/members/xxx/sites/xxx.org/users/xxx/xxx.org/include/civicrm-wgetrc-site4 http://www.xxx.org/wp-content/plugins/civicrm/civicrm/bin/cron.php 4,19,34,49 * * * * /usr/bin/wget --config=/home/members/xxx/sites/xxx.org/users/xxx/xxx.org/include/civicrm-wgetrc-site5 http://www.xxx.org/wp-content/plugins/civicrm/civicrm/bin/cron.php 5,20,35,50 * * * * /usr/bin/wget --config=/home/members/xxx/sites/xxx.org/users/xxx/xxx.org/include/civicrm-wgetrc-site6 http://www.xxx.org/wp-content/plugins/civicrm/civicrm/bin/cron.php

Note that each civicrm-wgetrc file has the "site" set differently in the post-data. E.g.:

post-data=name=civicron&pass=<redacted>&key=<redacted>&site=site5 output_document = - quiet=on timeout=1

Updated over 10 years ago by Jon Goldberg

User stories are short narrative examples, 3-4 sentences long, of a typical example of how you intend to use a CRM database. Here are some examples.

Updated almost 8 years ago by Jessie Lee

mysqld_safe --skip-grant-tables UPDATE mysql.user SET Password=PASSWORD('MyNewPass') WHERE User='root';

FLUSH PRIVILEGES;Maintaining projects with compass-based themes requires the ability to edit SCSS files and to recompile them to CSS. Be aware that any changes to CSS files (compiled output) will be lost when the stylesheets are recompiled, so you should always make changes to the SCSS files.

Compass is a tool for managing Sass stylesheet projects. Sass is a language that compiles to CSS and also the name of the compiler. Compass makes working with Sass outside of a Ruby project much easier, however it is entirely possible to work with a Compass project using just the Sass compiler. But truly it's not worth the trouble! You likely won't encounter a situation where you have sass but not compass and no permission to install compass.

sudo apt-get install rubygems sudo gem install compass --pre

This installs all dependencies including Sass.

Our projects tend to use additional compass plugins, which are installed as Ruby gems. Here are the set I commonly rely on:

sudo gem install compass-rgbapng survivalkit foundation

If one of these things is missing, you will be told in a very straightforward way when you try to compile a project that something is missing:

benjamin@dev1:/var/www/dev/nlg/sites/all/themes/prudence$ /var/lib/gems/1.8/bin/compass compile LoadError on line 31 of /usr/lib/ruby/1.8/rubygems/custom_require.rb: no such file to load -- survivalkit Run with --trace to see the full backtrace

The proper response to that is:

sudo gem install survivalkit

I'm referring to SCSS which is a syntax of Sass that closely resembles CSS. From Wikipedia

Sass consists of two syntaxes. The original syntax, called "the indented syntax" uses a syntax similar to Haml. It uses indentation to separate code blocks and newline characters to separate rules. The newer syntax, "SCSS" uses block formatting like that of CSS. It uses braces to denote code blocks and semicolons to separate lines within a block. The indented syntax and SCSS files are traditionally given the extensions .sass and .scss respectively.

None of our projects use the Sass syntax. Both variants are fully supported by Compass and Sass.

Compass-based themes are compass projects. You can identify them by the "config.rb" file in the theme directory. This config.rb specifies the location of SCSS source files and the destination of compiled output. In almost every case, "css" is the name of the output directory and "sass" is the name of the source directory.

These directories are relative to the theme directory itself. If there's any confusion, view the config.rb file to find the exact names of the directories.

# Location of the theme's resources. css_dir = "css" sass_dir = "sass" extensions_dir = "sass-extensions" images_dir = "images" javascripts_dir = "js" fonts_dir = "fonts"

In the "sass" directory, you will see the source files that end with "scss." Some of these files are prefixed with "_" which identifies them as "partials." This is a Ruby concept that appears throughout the ruby ecosystem. For sass, this simply means:

If you have a SCSS or Sass file that you want to import but don’t want to compile to a CSS file, you can add an underscore to the beginning of the filename. This will tell Sass not to compile it to a normal CSS file. You can then import these files without using the underscore.

From the root of the compass project (the Drupal theme), issue this command:

/var/lib/gems/1.8/bin/compass compile

The SCSS syntax allows you to use the same CSS rules you would normally use and they will be passed through to the compiled output. The main trouble you will find is finding the place to edit.

If you cannot find the place to make your correction, it's possible that the CSS you want to change is actually being generated by a mixin or a partial, which means the specific code you want to edit is not going to be in the source file with a similar filename to the compiled output.

In this case, you will need to enable line comments and debugging in Compass. In the config.rb file look for this statement:

line_comments = false

And change it to "true."

Then recompile the output and you will see things like this:

/* line 62, ../../../../../../../../lib/gems/1.8/gems/compass-0.13.alpha.4/frameworks/compass/stylesheets/compass/typography/lists/_horizontal-list.scss */

#social-media-links .pane-content ul li {

list-style-image: none;

list-style-type: none;

margin-left: 0;

white-space: nowrap;

float: left;

display: inline;

padding-left: 4px;

padding-right: 4px;

}

This indicates that this CSS block is generated using code from the horizontal list mixin from Compass. That's not something you should necessarily edit, but you can read the documentation on that mixin to understand why it's being used.

/* line 86, ../sass/_custom.scss */

.section-member #content .burr-flipped-content-inner a {

color: black;

}

This indicates that the code is being generated based on something in line 86 of _custom.scss, a partial file in this project, which you can edit.

It is not recommended to leave debugging enabled when committing your compiled output! It creates a lot of "diff noise" that doesn't belong in the git repository. When you've debugged your code, disable the line comments and re-compile before committing.

This Conflict Resolution Sheet is based upon work of AORTA and The Icarus Project. Our collective is going to start using this as a tool to help us deal with conflicts and have better communication. Each member fills out this sheet, and we store that information in our online documentation, enabling us to start conversations about difficult issues in ways that work better for us as individuals.

(ex. email me individually beforehand, create a discussion ticket beforehand, call me individually, chat with me)

(ex. bring it up for the first time in an in person group meeting, chat with me, call me)

(ex. interrupting others, trying to close the conversation prematurely, avoiding talking about it, raising my voice, being silent)

(ex. take time away from the issue, take a walk, eat or drink)

(ex. break down the issue into smaller parts, use formal consensus process, propose putting off making a decision)

(ex. phone calls, taking a walk, talking someone down)

Updated over 9 years ago by Jon Goldberg

This is documentation for this extension: https://github.com/cividesk/com.cividesk.sync.constantcontact. I'm not getting paid to fix the bugs mentioned here or ensure the accuracy of what I'm saying, but I took these notes and I'm posting them publicly in case anyone else benefits from them.

$last_sync = CRM_Utils_Array::value('last_sync', $settings, '2000-01-01 00:00:00');

to this:

$last_sync = '2000-01-01 00:00:00';

OpenLayers makes it possible to style the data layers of our maps. This could be helpful for visual accuracy of data represented as well as distinguishing between data on separate layers but rendered together on a single map.

To create a new style go to the Styles tab on the OpenLayers administration section. This lists all the existing styles available. Click to Add a new one or Edit an existing one. Give the style a distinguishing administrative name, machine name and description if adding a new one. All the options available have help text below each field which describe what each setting does. A few notes:

The best course when first creating styles is trial and error to get familiar with each option.

See https://devsummit.aspirationtech.org/index.php?title=Data_Munging for canonical notes which Jon plans to edit for accuracy.

Updated over 2 years ago by Jamila Khan

Check if any sources still point to stretch

cd /etc/apt grep -nr stretch .

If so, bring those up to buster and run updates first

https://redmine.palantetech.coop/projects/commons/wiki/Debian_9_to_10

Check to make sure kernel metapackage is installed, not just specific kernel

dpkg -l "linux-image*" | grep ^ii | grep -i meta should have results

If not, install metapackage

https://www.debian.org/releases/bullseye/amd64/release-notes/ch-upgrading.en.html#kernel-metapackage

List and purge removed packages with config files remaining

https://www.debian.org/releases/bullseye/amd64/release-notes/ch-upgrading.en.html#purge-removed-packages

aptitude search '~c' aptitude purge '~c'

These commands should have no results

aptitude search "~ahold" dpkg --get-selections | grep 'hold$'

Check which sources exist that point to buster

cd /etc/apt grep -nr buster .

Edit the main list, and any others that come up

vim /etc/apt/sources.list

replace buster with bullseye

:%s/buster/bullseye/g

replace bullseye/updates with bullseye-security

:%s/bullseye\/updates/bullseye-security/g

https://www.debian.org/releases/bullseye/amd64/release-notes/ch-information.en.html#security-archive

apt-get update

If you get a NO_PUBKEY error, see https://redmine.palantetech.coop/projects/commons/wiki/Debian_10_to_11#Common-problems

apt-get -o APT::Get::Trivial-Only=true dist-upgrade

apt-get upgrade

apt-get dist-upgrade

| package | change configs |

| nrpe | no |

| sudoers | no |

| journald | no |

| backupninja | no |

| nginx | yes but recheck after |

| redis | yes but recheck after |

| sshd_config | yes but recheck after |

| glibc | yes |

| logrotate.d/apache2 | yes |

mysql_upgrade

cat /var/log/apt/history.log | grep RemoveW: GPG error: https://apt.postgresql.org/pub/repos/apt bullseye-pgdg InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY 7FCC7D46ACCC4CF8

0 meat:/etc/apt# sudo gpg -a --export 7FCC7D46ACCC4CF8 | sudo apt-key add -

After update, backupninja likely needs to be patched

https://redmine.palantetech.coop/projects/pt/wiki/Icinga2#Patch-the-backupninja-binary

Borg backup jobs to May First need to have port = 2201 added to the destination section.

Updated 3 months ago by Hannah Siwiec

If the server is running on linode, check that image based backups are enabled / working. Take a snapshot before running the upgrade procedure!

Check if any sources still point to buster

cd /etc/apt grep -nr buster .

If so, bring those up to bullseye and run updates first

https://redmine.palantetech.coop/projects/commons/wiki/Debian_10_to_11

Check to make sure kernel metapackage is installed, not just specific kernel

dpkg -l "linux-image*" | grep ^ii | grep -i meta

should have results

If not, install metapackage

https://www.debian.org/releases/bookworm/amd64/release-notes/ch-upgrading.en.html#kernel-metapackage

List and purge removed packages with config files remaining

https://www.debian.org/releases/bookworm/amd64/release-notes/ch-upgrading.en.html#purge-removed-packages

aptitude search '~c' aptitude purge '~c'

These commands should have no results

aptitude search "~ahold" dpkg --get-selections | grep 'hold$'

Check which sources exist that point to bullseye

cd /etc/apt grep -nr bullseye .

Edit the main list, and any others that come up

vim /etc/apt/sources.list

replace bullseye with bookworm

:%s/bullseye/bookworm/g

Good to check but should be done if upgraded from Debian 10 to 11

replace bullseye/updates with bookworm-security

:%s/bookworm\/updates/bookworm-security/g

https://www.debian.org/releases/bookworm/amd64/release-notes/ch-information.en.html#security-archive

apt-get update

apt-get -o APT::Get::Trivial-Only=true dist-upgrade

apt-get upgradeif it asks whether to change /etc/apt/trusted.gpg.d/debian-archive-jessie-security-automatic.gpg, say yes

apt-get dist-upgradeChange configs during full upgrade (install the package maintainers version)

| package | change configs |

| nrpe | no |

| sudoers | no |

| journald | no |

| backupninja | no |

| nginx | yes but recheck after |

| redis | yes but recheck after |

| sshd_config | yes but recheck after |

| glibc | yes |

| logrotate.d/apache2 | yes |

| grub | no |

mysql_upgrade

if a web server, make sure websites are up

if an infrastructure server, test and make sure all parts of the infrastructure are working properly

if a PTC server check the recovery plan entry for that server to make sure everything has recovered

cat /var/log/apt/history.log | grep Remove

When issues are encountered they should be listed here to ease future troubleshooting!

After update, backupninja likely needs to be patched

https://redmine.palantetech.coop/projects/pt/wiki/Icinga2#Patch-the-backupninja-binary

Borg backup jobs to May First need to have port = 2201 added to the destination section.

Updated about 7 years ago by Jack Aponte

vim /etc/apt/sources.list

:%s/jessie/stretch/g

apt-get -o APT::Get::Trivial-Only=true dist-upgrade

apt-get update

apt-get upgrade

apt-get dist-upgrade

mysql_upgrade

PT ticket #35194-19

header.pl and constants.pm are in the ibackup directory but perl on debian does not look in the local directory for @INC.

to fix copy both header.pl and Constants.pm into /etc/perl/

Until backupninja gets the fixes pushed out to the Debian repo (PT ticket #35353), errors like these may be reported by backupninja:

CRITICAL - 38 errors or warnings

May 27 01:00:03 Warning: The partition table for /dev/ram0 could not be saved.

May 27 01:00:03 Warning: The partition table for /dev/ram1 could not be saved.

May 27 01:00:03 Warning: The partition table for /dev/ram2 could not be saved.

Resolution: add partitions = no to job 15/in 15-info.sys.

if Samba is in AD-DC mode, it and winbind fail.

do these commands, then try to run the upgrade again

systemctl stop smbd nmbd winbind systemctl disable smbd nmbd winbind systemctl unmask samba-ad-dc systemctl start samba-ad-dc systemctl enable samba-ad-dc

https://lists.samba.org/archive/samba/2017-July/209972.html

PHP5 (and Ruby 2.1) are not removed by default and will still be used if loaded!

If there is no need for these things to exist remove them with apt-get uninstall.

https://www.howtoforge.com/tutorial/how-to-upgrade-debian-8-jessie-to-9-stretch/

Updated over 5 years ago by Jamila Khan

check which sources exist that point to stretch

cd /etc/apt grep -nr stretch .

Edit the main list, and any others that come up

vim /etc/apt/sources.list

:%s/stretch/buster/g

apt-get -o APT::Get::Trivial-Only=true dist-upgrade

apt-get update

apt-get upgrade

apt-get dist-upgrade

mysql_upgrade

cat /var/log/apt/history.log | grep Removeencryption = none just above the passphraseNewest version of mariadb doesn't have separate databases for information schema or performance schema, so remove the old backups of those.

cd /var/backups/mysql/sqldump rm information_schema.sql.gz rm performance_schema.sql.gz

Need to re-replace the backupninja binary in /usr/sbin/backupninja https://redmine.palantetech.coop/projects/pt/wiki/Icinga2#Backupninja-monitoring

If using a script to check bang via wp, make sure the script uses the full path to /usr/local/bin/wp

First create databases on local environment.

mysqladmin -u root create PROJECT_drupal mysqladmin -u root create PROJECT_civicrm

Then create your project Drupal root and install Drupal.

cd ~/workspace/PROJECT drush --yes site-install -y --db-url=mysql://root@localhost/PROJECT_drupal

Create a Drupal alias of this project.

cd sites/default drush sa --with-optional --with-db --full --alias-name=@local @self >> ~/.drush/PROJECT.aliases.drushrc.php

Log in to remote live host and create a new alias description for the live environment.

cd PROJECT DRUPAL ROOT cd sites/default drush sa --with-optional --with-db --full --alias-name=@live @self

Use these commands on localhost to synchronize.

drush --yes sql-sync @PROJECT.live @PROJECT.local drush --yes rsync @PROJECT.live:%files @PROJECT.local:%files drush @PROJECT.live civicrm-sql-dump | drush @PROJECT.local civicrm-sql-cli

One Drupal installation can support many separate sites through Drupal's multi-site functionality. We want you to use this multi-site set up because it imposes some constraints on your development environment that will ensure that your work will migrate to other environments without a hitch.

Developing in a multi-site environment immediately shakes out a common class of problems related to paths and URLs. If the site you're developing is hosted in sites/mysite but in other environments it's hosted in sites/myhost.com, you'll have to use url() and drupal_get_path() functions to reliably load PHP include files or theme assets across all of your environments.

Drupal-specific deployment systems (AEgir) rely on sites' ability to deploy into a multi-site environment.

Finally, the development environment you build will be one that suits your skills and needs. There are minimums you need to accomplish, but we will indicate when you can stop if you're satisfied for now. If you want to build a more sophisticated, complex and automated development environment, keep following along.

Relying on a multisite-oriented environment enforces another good Drupal developer practice: don't hack core. If your Drupal projects share a common Drupal core, any core hacks you make will also be shared by all of these other projects. If a core hack is good enough for multiple sites, it's good enough to submit as a patch to core!

At the same time, it's justified to make some patches to core just for your development environment. For example, see the section about Xdebug.

Even though Devel module will override core's minified version of jQuery with an uncompressed copy, serious jQuery debugging will require that all of your scripts be uncompressed, which means you will need to replace jQuery UI with an uncompressed version.

Multisite : Each Drupal platform (core and any install profiles) will support

Uniformity : We explain how to set up a development environment which is uniform regardless of your preferred operating system. Only installation and upgrades are different depending on your operating system. Configuration and usage are consistent regardless of whether you use Windows, Mac OS X or Linux.

Maintenance : The development environment will be set up so that all of your web development projects are stored in the same part of your file system: a directory named workspace.

TODO: Create a directory inside your home directory named “workspace”

This development environment is directly inspired by the “sandbox” design used by CivicActions. This was developed by Owen Barton, Fen Labalme and the staff of OpenWall. The same principles for organizing the development environment are used for easily deploying sites in staging, testing and production environments by CivicActions.

Drubuntu also inherits from this design, and it's highly recommended for users who are already using Ubuntu 10.10.

You need to create a LAMP stack on your computer. LAMP is an acronym for Linux, Apache HTTP Server, MySQL and Perl/PHP/Python. Most Drupal sites are deployed on this software.

Drupal can run in other server environments and other platforms. Obviously, we are not going to insist that you switch your personal operating system to Linux just to develop Drupal sites, so Windows and Mac can be substituted for Linux. Solaris and FreeBSD are also suitable platforms for Drupal, but if you are developing sites in this environment, you will need to translate the Linux instructions using your own know-how. On Windows, IIS is supported, but it's not recommended. Drupal 7 supports MySQL, PostgreSQL and SQLite, but most Drupal sites use MySQL and there's a tremendous amount of documentation, GUI client software and support for MySQL. We're going to omit Perl and Python, because Drupal only needs PHP. You will still be able to install Perl or Python if you need it.

The main differences about configuring your development environment are the location of configuration files and the default settings in those configuration files. Use the table to identify the configuration files you may need to edit. If your files are located in another spot, note this because you'll need this information in the future.

| MAMP | Homebrew | Windows | Ubuntu Linux | |

| http.conf | /Applications/MAMP/conf/apache/httpd.conf | /private/etc/apache2/httpd.conf | /etc/apache2/httpd.conf | |

| my.cnf | /Applications/MAMP/db/mysql/my.cnf | /usr/local/var/mysql | /var/lib/db/mysql | |

| php.ini | /Applications/MAMP/conf/php5.3/php.inii | /opt/local/etc/php5/php.ini | /etc/php5/conf.d/php.ini |

Some of us use version control to track changes and maintain a backup history for our configuration files

For Windows, we will use WampServer. http://www.wampserver.com/en/

WampServer 2.1 meets all the requirements for Drupal 7. For earlier versions of Drupal, you will need to also download an older version of PHP to install.

http://en.wikipedia.org/wiki/Comparison_of_WAMPs

WAMP's default httpd.conf needs to have mod rewrite enabled.

Wrap paths in quotation marks.

WAMP already loads configuration files from an aliases directory, so instead of including the file in httpd.conf, copy it to the directory named in the include.

Vhost_alias_module needed.

For Mac, there are two strategies for maintaining a Drupal development environment with their own strengths and weaknesses. Mac OS X could support Drupal on a the default system distributed by Apple Inc. It has Apache HTTPD Server and PHP already installed, and each user has a home directory named “Sites” which is Apache's user home directory. However, it does not have MySQL.

The Macintosh software community has provided two kinds of LAMP stacks: binary application packages and package managers. MAMP is an application package, which you download and install by copying. It is by far the quickest and easiest way to set up a development environment. However, it requires configuration after it has been installed. When you upgrade MAMP, you will need to remember to revisit these instructions so that your development environment does not break.

The alternative and more complicated method relies on a package manager. All package managers require that you install the Mac OS X Developer Tools. These developer tools are distributed on Mac OS X Install DVDs and also downloadable from http://developer.apple.com. We encourage you to customize the installation so that you can exclude documentation or extras you don't want, because the developer tools are very large. The documentation does include UNIX manual pages and Apple Developer documentation for Safari and Mobile Safari and a useful amount of web standards documentation. Likewise, XCode could be an IDE for your Drupal sites, but it's missing some simple features available in free and better tools.

As you improve in your web development skills in Drupal, you will confront the need to compile any number of new tools or servers for your environment. People in the Drupal community use more than just Drupal, and we will introduce you to new things like Compass, Solr, Memcached, Cucumber or a PECL add-ons. We urge you to use a package manager for these other tools because otherwise you risk destroying or disabling your development environment whenever you upgrade Mac OS X. Not every piece of useful web development software is distributed as a compiled binary for Mac OS X.

MAMP from http://www.mamp.info/ is an application that you will copy into your Applications folder. Although it is running system services, it's attaching them to high-number, non-standard ports. MAMP's preferences are user specific, but MAMP may not run correctly if multiple users on the same computer want to use MAMP. It can run concurrently with Mac OS X's own Apache server (the “Web server” controlled by the Sharing preference pane), though you hopefully do not need to run both at the same time.

After copying MAMP to your Applications folder, run it. You will be greeted by the MAMP application window and the MAMP start page in your default web browser as served by MAMP. The start page for MAMP gives you access to phpMyAdmin, various PHP info pages and some documentation. It also shows the default MYSQL credentials.

MAMP runs as the logged in user, and any files it creates (MySQL database files, log files, files uploaded through your Drupal development sites, Imagecache generated files) will be owned by the logged in users. This makes backup very simple and reliable, but it could lead to problems if two users on the same computer want to use MAMP for their own projects.

The MAMP application window allows you to turn on and off the servers, open the start page, and set preferences. By default, MAMP will start and stop all of its services whenever the MAMP application is opened or closed. We like these preferences for MAMP. MAMP currently is distributed with PHP 5.2 and 5.3, and you may need to switch between these PHP versions if you're supporting Drupal 6 or other PHP applications. For Drupal 7, all of MAMP's default settings are appropriate.

Homebrew is a Mac OS X package manager that compiles from source and leverages software already installed as part of Mac OS X. Apache HTTPD Server and PHP 5.3 are already installed on Mac OS X Snow Leopard. Mac OS X Leopard uses PHP 5.2, which is also supported in Drupal 7. The only piece missing is MySQL.

You must install the Mac OS X Developer Tools before attempting to set up a development environment with Homebrew. If you are already suing MacPorts or Fink, you should avoid running both Homebrew and another package manager.

Install Homebrew from http://mxcl.github.com/homebrew/

In the terminal, run “brew install mysql.”

Users of Ubuntu Linux are fortunate to have access to a high-quality LAMP from within their package manager (usually Synaptic). Using the package manager, install these packages and their dependencies:

apt-get install apache2 apache2-mpm-prefork mysql-server curl libapache2-mod-php5 php5-cli php5-common php5-curl php5-dev php5-mcrypt php5-mysql php5-sqlite php5-xdebug php5-xsl php-apc php-pear

Drupal prefers Apache HTTPD Server. Core Drupal features like clean URLs are usually supported with no additional configuration.

Because Apache sometimes runs as a system user (usually www, www-data, apache or httpd), you may need to edit a few configuration files as the superuser or Administrator.

For your environment, Apache virtual host configurations will define at least one Drupal platform in which individual sites will be developed. A Drupal platform is the set of files that constitute Drupal core, any installation profiles, and any shared modules, themes or libraries located in sites/all.

In your workspace, create a new directory named conf.d.

In this directory, create these files. These configuration files define virtual hosts for each Drupal platform in your workspace.

https://github.com/bangpound/webdev-workspace/blob/master/workspace/conf.d/000-default.conf

This sets up some blanket options that apply to every project in your workspace, so that essential URL rewriting and access permissions are set correctly.

https://github.com/bangpound/webdev-workspace/blob/master/workspace/conf.d/drupal-7.conf

This tells Apache about Drupal 7. When you access your development environment's sites through your web browser, you will be navigating a URL that only your computer can access, for example http://new-site.7/. This configuration file tells Apache that any request it receives for a URL where the host name ends with .7, it should serve files from your Drupal 7 site directory.

https://github.com/bangpound/webdev-workspace/blob/master/workspace/conf.d/localhost.conf

This file gives you some flexibility to break out of the rigid structure of this development environment. If you need to engineer on new Drupal platform or maintain a site that for whatever reason does not function in a multisite environment, you can add that project to your workspace. For example, if you want to set up Tattler http://tattlerapp.com/, you can put its directory in your workspace as tattlerapp, and it will be accessed as http://tattlerapp.localhost/.

Typical, current Apache HTTPD installations use a modular configuration file arrangement. This allows us to modify httpd.conf as little as possible, because this may be rewritten in a software upgrade. In your Apache configuration directory /etc/apache2, there is httpd.conf and an extra directory.

In your Apache's httpd.conf, add the following:

bc. #Then create the webdev-workspace.conf file in your extra directory. This simply loads the configuration files from your workspace.

# # Include workspace configurations # Include /PATH/TO/YOUR/workspace/conf.d/*.conf

The hosts file exists on every operating system http://en.wikipedia.org/wiki/Hosts_(file), and it's usually the last place your computer looks to resolve a domain name. The hosts file is what gives your system the name “localhost,” and it's very important that you never remove entries from the file that you didn't put there yourself.

Each project in your development environment needs to be accessible at the root of a unique host name, because this is how your project will likely be deployed. If your project will be deployed in a subdirectory of a larger site or in a user's home directory, you will want to recreate this too so you can catch any path name errors.

Edit /etc/hosts

Add a block comment to indicate where your changes begin and add the first hostname.

bc. #Usually when PHP is installed, it's set up for a development environment. Certain settings are unsuitable for a production environment, so you should make sure you have the right php.ini file installed.

Often, two configuration files come with PHP. php.ini-dist contains settings appropriate for your development environment. php.ini-recommended contains a few changes that hide error messages. The files have only a handful of differences to address the security and performance requirements of development and production environments.

Your development environment exists to show you how bad your work might be, and this is a better plan than letting your production environment show everyone in the world how bad your code actually is!

Verify that your php.ini file is configured with these settings:

bc. display_errors = On

PHP has a customizable error system, which Drupal 7 takes advantage of, which allows applications to handle non-fatal errors themselves and to change settings that affect output and thresholds.

Prior to Drupal 7, release versions of Drupal would not display PHP notice errors (E_NOTICE) and you either had to hack core or use a development release to display these error messages. Notices are useful because they reveal simple but hard to spot mistakes such as using variables that you've not assigned or using ambiguous syntax. http://drupal.org/node/34341

Error reporting may be annoying when the error has no apparent impact on the site's functionality, but notices often appear because of mistakes that are not going to cause fatal errors but which lead to buggy behavior.

MySQL really has no special problems for setting up. Recommendations are to install from a package or build from source using a package manager.

Editing your server's my.conf file to enable innodb_file_per_table to make the InnoDB files smaller and fragmented, which is friendlier to a development environment.

Per CRM-10366, the setting exists, but no form exists for it.

The easiest way to change it is via API explorer: <mysite>/civicrm/api/explorer

Select "Setting" as your entity, and "Create" as your action. Click the "CVV required for backoffice?" link that appears and enter a value of "0".

Updated about 9 years ago by Jack Aponte

This table is a list of Drupal 7 modules that Palante commonly uses in building client sites, accompanied by their current status in Drupal 8 and Backdrop.

| Module | Backdrop status | Backdrop version | D8 status | D8 version |

|---|---|---|---|---|

| adaptivetheme | unported | unported | pre-release | dev |

| addanother | ported | full | unported | none |

| admin_views | core | n/a | core | n/a |

| auto_entitylabel | pre-release | full | in progress | dev |

| auto_nodetitle | ported | full | in progress | none |

| backup_migrate | ported | full | in progress | dev |

| better_formats | ported | full | in progress | dev |

| breakpoints | unported | n/a | core | n/a |

| calendar | in progress | dev | in progress | dev |

| civicrm | in progress | beta | in progress | beta |

| ckeditor_link | unported | n/a | unported | none |

| coffee | ported | full | pre-release | beta |

| comment_notify | ported | full | in progress | dev |

| ctools | core | n/a | core & in progress* | dev |

| date | core | n/a | core | n/a |

| devel | ported | full | pre-release | dev |

| diff | unported | none | pre-release | alpha |

| domain | unported | n/a | in progress | dev |

| entity | core | n/a | core & in progress | alpha |

| features | unported | none | pre-release | beta |

| google_analytics | ported | full | ported | full |

| honeypot | ported | full | ported | full |

| i18n | in progress | dev | core | n/a |

| imagefield_crop | unported | none | unported | none |

| imce | ported | full | ported | full |

| imce_filefield | unported* | none | unported* | none |

| imce_mkdir | unported | none | unported | n/a |

| imce_wysiwyg | core | n/a | unported | n/a |

| libraries | ported | full | in progress | dev |

| login_destination | ported | full | unported | n/a |

| logintoboggan | ported | full | unported | n/a |

| messaging | unported | none | unported* | none |

| metatag | unported* | none | pre-release | beta |

| nagios | unported | none | pre-release | dev |

| navbar | core | n/a | core | n/a |

| notifications | unported | n/a | unported* | none |

| og | unported | n/a | in progress | none |

| omega | unported | unported | pre-release | alpha |

| pathauto | core | full | pre-release | alpha |

| panels | core (layouts) | n/a | pre-release | beta |

| panels_breadcrumbs | unported | none | unported | none |

| picture | unported | none | core | n/a |

| piwik | unported | n/a | ported | full |

| radix_layouts | in progress* | dev | pre-release | rc |

| redirect | ported | full | pre-release | alpha |

| responsive_menus | ported | full | in progress | none |

| rules | in progress | dev | pre-release | alpha |

| search_api | unported | n/a | pre-release | alpha |

| search404 | ported | full | pre-release | alpha |

| service_links | unported | n/a | unported | none |

| sharethis | ported | full | pre-release | beta |

| spamspan | unported* | none | pre-release | dev |

| stage_file_proxy | unported | n/a | in progress | dev |

| strongarm | core | n/a | core | n/a |

| styleguide | ported | dev | unported | n/a |

| submitted_by | ported | dev | unported | n/a |

| token | in progress | dev | pre-release | alpha |

| total_control | unported | none | unported | none |

| views | core | n/a | core | n/a |

| views_bulk_operations* | unported | n/a | in progress | none |

| webform | ported | full | in progress* | none |

| webform_civicrm | unported | none | planned | none |

| wysiwyg | core | n/a | core | n/a |

| wysiwyg_mediaembed | unported* | none | unported | none |

Notes

Also check out these related resources:

backdrop-portUpdated over 9 years ago by Jon Goldberg

Filemaker data is obviously a lot less uniform than data from another CRM, but I'm documenting some tips and tricks here.

Specify in Kettle that input files exported as "Windows (ANSI)" (the default on Windows) are opened as file encoding CP-1252.

Here's a Javascript snippet I use in Kettle to convert them to separation characters:

var Activities; Activities = replace(Activities,'\v', '\x01');

Note that sometimes I'll want to convert them to newlines instead.

Updated about 8 years ago by Jon Goldberg

There are two basic approaches to exporting RE data. There's the built-in export tool, and there's direct SQL interaction. This document will try to cover both approaches where possible. The Export tool has a lower barrier to entry, but a) there's some data you can't export with the tool, and b) the data will be denormalized, requiring additional transformation compared to extracting normalized SQL data.

Note that there's a good video on this topic by Young-Jin from Emphanos, LLC, here: http://sf2013.civicrm.org/migrating-raisers-edge-civicrm

If so, you can use my Raiser's Edge to CiviCRM transforms, available here: https://github.com/PalanteJon/civicrm_kettle_transforms

The Raiser's Edge Export tool is on the left toolbar when you first enter Raiser's Edge.

From the tool, you will create a number of exports. When you first create an export, you'll be asked a number of questions, including Export Type (Constituent, Gift, etc.), a checkbox to include inactive records (check this), and an export file type (select CSV).

For most export, select Constituent as the Export type. This is the "base table" - all records will be joined relative to it.

RE differentiates between constituents and non-constituents in their system. If you create a new contact, they're a constituent - but then you might decide to add a spouse or employer record, which is NOT considered a constituent, and doesn't show up in most queries. Notably, non-constituents aren't exported when using the Export tool and your base table is "Constituent".

If extracting directly from SQL, SELECT * FROM RECORDS.

Note that you can extract only constituents by adding WHERE IS_CONSTITUENT = -1. For a Civi migration, I recommend importing all contacts.

Tab 1. General:

- Include all records.

- Head of Household processing: Export both constituents separately.

- Check all of the "Include these Constitutents": Inactive, deceased, no valid address

Tab 2: Output.

First, expand the "Constituent Information" in the left pane, and add every field to the export. Do the export (as a CSV).

In RE: Found at the bottom of the "Bio 2" tab.

In SQL: CONSTITUENT_CODES maps to "GroupContact". TABLEENTRIES stores the codes ("groups"). In my case, SELECT * FROM [CCR_July_snapshot].[dbo].[TABLEENTRIES] WHERE [CODETABLESID] = 43 did the trick. YMMV - see "deciphering stored procedures" below.

Export as one to many, below.

These map to "groups" in Civi - can also be mapped to "tags" if you don't need to track the begin/end date on them.

No need to export these fields:

System Record ID

Import ID

As of Civi 4.4.6, there's no way to import Group Begin/End dates via API, you need to do it via direct SQL.

These can map to groups - but also may map to privacy preferences or custom fields (e.g. Email Only, Do Not Solicit)

SQL to extract solicit codes:

SELECT RECORDSID AS external_identifier, LONGDESCRIPTION as solicit_code FROM CONSTITUENT_SOLICITCODES JOIN TABLEENTRIES ON SOLICIT_CODE = TABLEENTRIES.TABLEENTRIESID WHERE TABLEENTRIES.ACTIVE = -1

In my copy of RE, the CODETABLESID is 5044, so to get a list of all solicit codes, use:

SELECT LONGDESCRIPTION, ACTIVE FROM TABLEENTRIES WHERE CODETABLESID = 5044 ORDER BY SEQUENCE;

SQL tables: ADDRESS, CONSTIT_ADDRESS

Addresses are a many-to-many relationship in RE.

Not all addresses in the database are visible in RE. Addresses where the INDICATOR field is 1 or 7, for instance. Make sure to look your data over and filter those out accordingly.

RE is a child of the 90's, so a) phones are tied to addresses, not contacts, and b) e-mails and websites are a type of phone.

Notes:This SQL gets me a useful list of phones and e-mail for further processing in Kettle:

SELECT DISTINCT CONSTITADDRESSID , CONSTIT_ID , PHONETYPEID , CONSTIT_ADDRESS_PHONES."SEQUENCE" , NUM , DO_NOT_CALL , TEXT_MSG FROM CONSTIT_ADDRESS_PHONES LEFT JOIN PHONES ON CONSTIT_ADDRESS_PHONES.PHONESID = PHONES.PHONESID LEFT JOIN CONSTIT_ADDRESS ON CONSTITADDRESSID = CONSTIT_ADDRESS.ID

Relevant SQL table: CONSTIT_RELATIONSHIPS

Relationships are different in Civi and RE in the following significant ways:name_b_a field in civicrm_relationship_type.name_a_b and name_b_a in civicrm_relationship_type.Solicitor relationships are stored in a different table. I used this SQL to extract them:

SELECT CONSTIT_ID , SOLICITOR_ID , TABLEENTRIES.LONGDESCRIPTION as solicitor_type , AMOUNT , NOTES , cs."SEQUENCE" as weight FROM CONSTIT_SOLICITORS cs LEFT JOIN TABLEENTRIES ON cs.SOLICITOR_TYPE = TABLEENTRIES.TABLEENTRIESID ORDER BY weight

Attributes are the RE equivalent of custom fields. However, unlike custom fields, they can also have a "date" value and a "comments" value. While this can be replicated in Civi via multi-record custom field groups, ideally the data is evaluated attribute by attribute.

Valuable information about the setup of the attributes is available in RE from Config > Attributes.

civicrm_custom_field is AttributeTypes.AttributeTypes.CODETABLESID gives a lookup for the RE "option group" that contains valid options for that attribute.ConstituentAttributes. Note that it's stored in a Key-Value Pair-style table - you'll need to do a bunch of SQL queries, or run a Kettle "Row Denormaliser" step to get this data in order.Here's my preliminary SQL to export attributes from RE:

SELECT ca.PARENTID as external_identifier , ca.ATTRIBUTETYPESID , at.DESCRIPTION as Category , TABLEENTRIES.LONGDESCRIPTION as Description , TEXT , NUM , DATETIME , CURRENCY , "BOOLEAN" , COMMENTS , ca.ATTRIBUTEDATE FROM ConstituentAttributes ca JOIN AttributeTypes at ON ca.ATTRIBUTETYPESID = at.ATTRIBUTETYPESID LEFT JOIN TABLEENTRIES ON ca.TABLEENTRIESID = TABLEENTRIES.TABLEENTRIESID

note: In the SQL above, "PARENTID" and not "ConstitID" is the correct foreign key to link this to the contact.

To get a list of option values out of RE for the attributes, use this SQL:

SELECT DESCRIPTION , at.CODETABLESID , LONGDESCRIPTION FROM TABLEENTRIES te LEFT JOIN AttributeTypes at ON te.CODETABLESID = at.CODETABLESID ORDER BY DESCRIPTION

Attributes can be multi-record custom fields by their nature, so you have to account for that. Here's some alpha-grade SQL for sussing out which fields have multi-record custom fields:

SELECT ATTRIBUTETYPESID, PARENTID, COUNT(LONGDESCRIPTION) FROM ConstituentAttributes ca JOIN TABLEENTRIES te ON ca.TABLEENTRIESID = te.TABLEENTRIESID GROUP BY PARENTID, ATTRIBUTETYPESID HAVING COUNT(LONGDESCRIPTION) > 1 ORDER BY ATTRIBUTETYPESID

note: In Civi 4.5+, you could conceivable use "EntityRef" functionality to facilitate chained selects of OptionValue lists. That would let you create a multi-record custom field group that would very closely map how Attributes work in RE - but you'd have all the disadvantages of multi-record custom fields.

RE stores contact salutations and addressee info in two places.

Primary salutations/addressess are stored on the RECORDS table. PRIMARY_ADDRESSEE_ID, PRIMARY_ADDRESSEE, and PRIMARY_ADDRESSEE_EDIT, PRIMARY_SALUTATION_ID, PRIMARY_SALUTATION, PRIMARY_SALUTATION_EDIT.

An unlimited number of non-primary salutations can be stored in the CONSTITUENT_SALUTATION table.

Salutation options values are stored in the SALUTATION table, in the format "CODE1, CODE2, CODE3, etc.". Each code refers to an id in the SALUTATION_FIELDS table, which contains tokens (e.g. "First Name", "Spouse Last Name") as well as common words like "And".

Note that PRIMARY_ADDRESSEE is more akin to addressee_display in Civi, in that it stores the calculated display ID. Also note that when PRIMARY_ADDRESSEE_EDIT is -1 (true), that's the equivalent of a custom addressee in Civi, and the value stored in PRIMARY_ADDRESSEE_ID must be ignored.

Contributions (in RE parlance: Gifts) are complicated beasts!

Here are some relevant database tables and their equivalent in Civi:

GIFT civicrm_contribution

GiftSplit civicrm_line_item

CAMPAIGN Roughly maps to Campaign. Your mapping may vary and/or include custom fields.

APPEAL Also roughly maps to Campaign (or Source). Your mapping may vary and/or include custom fields.

FUND Roughly maps to Financial Type, but you might choose to import as a custom field instead.

See "Campaigns, Appeals, Packages" below for more.

Finally, note that RE has a concept of an "Adjustment". If a gift has already been posted to the accounting software, you can't simply change the amount. You create an adjustment, which has the updated data, and the two gift records are linked via the AdjustmentId. This is also how pledge installments are written off (see "Pledges" below).

Note that gift type is hardcoded into a function called "TranslateGiftType) - so you may want to include that function in your SQL, e.g.:

SELECT gs.GiftId , g.CONSTIT_ID , gs.Amount , g.DTE as gift_date , FUND.DESCRIPTION as fund , CAMPAIGN.DESCRIPTION as campaign , APPEAL.DESCRIPTION as appeal , g.PAYMENT_TYPE , g.ACKNOWLEDGE_FLAG , g.CHECK_NUMBER , g.CHECK_DATE , g.BATCH_NUMBER , g.ANONYMOUS , gst.LONGDESCRIPTION as giftsubtype , g.TYPE , DBO.TranslateGiftType(g.TYPE) as type2 FROM GiftSplit gs LEFT JOIN FUND on gs.FundId = FUND.id LEFT JOIN APPEAL on gs.AppealId = APPEAL.id LEFT JOIN CAMPAIGN on gs.CampaignId = CAMPAIGN.id LEFT JOIN GIFT g on gs.GiftId = g.ID LEFT JOIN TABLEENTRIES gst on g.GIFTSUBTYPE = gst.TABLEENTRIESID

(See here: http://www.re-decoded.com/2013/07/payment-type-or-payment-method-id-reference/#more-714)

Payment Type is also hard-coded, it seems:

1 Cash

2 Personal Check

3 Business Check

4 Credit Card

5 Standing Order

6 Direct Debit

7 Voucher

8 Other

Stored in GIFTSOFTCREDIT. RE does NOT have the concept of a soft credit type - which is fine.

SELECT , GiftId , ConstitId , Amount , 'Soft Credit' as soft_credit_type FROM GiftSoftCredit

(Important! Gift solicitors are different from Contact Solicitors)

I imported these as soft credits, but a different TYPE of soft credit. Here's the SQL I used to get the data out of RE:

SELECT ParentId as gift_id , SolicitorId as soft_creditee_external_identifier , Amount , 'Solicitor' as soft_credit_type FROM GiftSolicitor

As of CiviCRM 4.5, In Honor/Memorial of is considered a form of soft credit. In RE, they're still separate, and are called Tributes. The structure is a little more complex - the table structure is Constituent <-> Tribute <-> Gift_Tribute <-> Gift. Civi is Contact <-> Soft Credit <-> Contribution.

Here is some preliminary SQL that pulls tribute data suitable for transformation and import to Civi as ContributionSoft entities. Note that CiviCRM doesn't have a concept of a "Description" but does have the concept of a PCP Note, so I'm importing the description there - in the future, I could see the argument for Civi exposing the PCP Note as a description.

<pre> SELECT </pre> gt.GIFT_ID , gt.TRIBUTE_TYPE , t.DESCRIPTION , t.RECORDS_ID as tributee_extenal_identifier , te.LONGDESCRIPTION as tribute_type FROM GIFT_TRIBUTE gt JOIN TRIBUTE t ON gt.TRIBUTE_ID = t.ID LEFT JOIN TABLEENTRIES te on gt.TRIBUTE_TYPE = te.TABLEENTRIESID

Here are the relevant tables and their equivalents in Civi:

GIFT

Installment

InstallmentPayment

GIFT is equivalent to civicrm_contribution AND to civicrm_pledge. Pledges and contributions are stored in the same table - so a pledge paid in six installments will have SEVEN records in the GIFT field. Many organizations will specify a pledge in the Gift Type field - you can also tell by the presence of the INSTALLMENT_FREQUENCY, NUMBER_OF_INSTALLMENTS, FrequencyDescription, REMIND_FLAG, NextTransactionDate and the Schedule* fields. Note that some of these might also be used for recurring contributions.

Installment and InstallmentPayment are, when combined, the equivalent of the civicrm_pledge_payment table. civicrm_pledge_payment has a field scheduled_amount and actual_amount. RE's model is somewhat superior in that it allows partial payments on a pledge installment.

Notes: When creating pledges in CiviCRM via API, the open pledge payments are simultaneously created. To import pledge payments from RE, you first want to delete the auto-generated pledge payments, then import your own pledge payments. Finally, when importing pledge payments, the status of the parent pledge isn't updated. So you'll probably want some SQL to indicate whether the pledges are pending, complete or canceled, depending on the status of the pledge payments. Finally, watch out for adjustments, which is how some or all pledge installments might be marked as "Written Off" in RE.

The INSTALLMENT_FREQUENCY list is hard-coded:| 1 | Annually |

| 2 | Every 6 Months |

| 3 | Every 3 Months |

| 4 | Every 2 Months |

| 5 | Every Month |

| 6 | Due Twice/Month |

| 9 | Irregular |

| 10 | Single Installment |

Some SQL:

/* Find all GIFT records with one or more associated Installment records. These are pledges OR recurring gifts. */ SELECT DISTINCT g.CONSTIT_ID , g.ID as GiftId , g.Amount , g.DTE as receive_date , FUND.DESCRIPTION as fund , FUND.FUND_ID , CAMPAIGN.DESCRIPTION as campaign , APPEAL.DESCRIPTION as appeal , g.PAYMENT_TYPE , g.ACKNOWLEDGEDATE , DBO.TranslateGiftType(g.TYPE) as type , g.REF as note ,DATE_1ST_PAY ,g.DATEADDED ,g.DATECHANGED ,INSTALLMENT_FREQUENCY ,NUMBER_OF_INSTALLMENTS ,POST_DATE ,POST_STATUS ,REMIND_FLAG ,Schedule_Month ,Schedule_DayOfMonth ,Schedule_MonthlyDayOfWeek ,Schedule_Spacing ,Schedule_MonthlyType ,Schedule_MonthlyOrdinal ,Schedule_WeeklyDayOfWeek ,Schedule_DayOfMonth2 ,Schedule_SMDayType1 ,Schedule_SMDayType2 ,NextTransactionDate ,Schedule_EndDate ,FrequencyDescription , r.CONSTITUENT_ID FROM Gift g LEFT JOIN GiftSplit gs on g.ID = gs.GiftId LEFT JOIN FUND on gs.FundId = FUND.id LEFT JOIN APPEAL on gs.AppealId = APPEAL.id LEFT JOIN CAMPAIGN on gs.CampaignId = CAMPAIGN.id LEFT JOIN RECORDS r ON g.CONSTIT_ID = r.ID JOIN Installment i ON g.ID = i.PledgeId

Find pledge payments:

/* Find all pledge installments, and their related payments if they exist. */ SELECT i.InstallmentId , i.PledgeId , i.AdjustmentId , i.Amount as scheduled_amount , i.Dte , ip.Amount as actual_amount , ip.PaymentId , g.CONSTIT_ID , g.RECEIPT_AMOUNT , g.DTE as receive_date , g.TYPE , DBO.TranslateGiftType(g.TYPE) as type FROM Installment i LEFT JOIN InstallmentPayment ip ON i.InstallmentId = ip.InstallmentId LEFT JOIN GIFT g ON ip.PaymentId = g.ID /* Adjustments are stored in here too - when an adjustment happens, the pledge ID of the original value is blanked */ WHERE i.PledgeId IS NOT NULL ORDER BY i.AdjustmentId /* Write-off Types: Covenant WriteOff, MG Write Off, Write Off */

RE's model for campaigns is hierarchical and more sophisticated than CiviCRM's. A campaign (e.g. "Capital fundraising FY2017") can consist of several appeals (e.g. "Capital fundraising FY 2017 Spring Mailing"). Appeals will generally correspond to a particular action, especially a postal mailing. Campaigns and Appeals can be linked in a many-to-many relationship, but this is rare. The 1-to-many is by far the most common approach. Finally, an appeal can consist of "packages", which is a segment of your appeal. For instance, a single mailing ("appeal") could go out to major donors, regular donors and non-donors. You might also A/B test envelopes vs. postcards in the same mailing. This would result in a total of six "packages" for a single appeal. RE can track goals for each separately, and easily report on "number solicited" by package vs. number of gifts received.

Actions fill the same purpose as Activities in CiviCRM, but are architected quite differently - in some ways better, some ways worse. I don't have as much concrete info here, but here's a decent start at extracting Actions data via SQL:

SELECT a.ADDED_BY , a.AUTO_REMIND , a.RECORDS_ID as external_identifier , cr.RELATION_ID as action_contact_id , a.DTE as activity_date_time , LETTER.LONGDESCRIPTION as letter , a.PRIORITY as priority_id , a.REMIND_VALUE , a.CATEGORY , a.Completed , a.COMPLETED_DATE , a.FUND_ID , a.FOLLOWUPTO_ID , a.TRACKACTION_ID , a.PhoneNumber as phone_number , a.Remind_Frequency , a.WORDDOCNAME , a.APPEAL_ID , a.APPEAL_LETTER_CODE , a.OUTLOOK_EMAIL_SUBJECT , STATUS.LONGDESCRIPTION as status , TYPE.LONGDESCRIPTION as type , LOCATION.LONGDESCRIPTION as location , ActionNotepad.ActualNotes , CAMPAIGN.DESCRIPTION as campaign FROM ACTIONS a LEFT JOIN TABLEENTRIES as STATUS ON a.STATUS = STATUS.TABLEENTRIESID LEFT JOIN TABLEENTRIES as TYPE ON a.[TYPE] = [TYPE].TABLEENTRIESID LEFT JOIN TABLEENTRIES as LOCATION ON a.[Location] = LOCATION.TABLEENTRIESID LEFT JOIN TABLEENTRIES as LETTER on a.[LETTER_CODE] = LETTER.TABLEENTRIESID LEFT JOIN ActionNotepad ON a.ID = ActionNotepad.ParentId LEFT JOIN CAMPAIGN on a.CAMPAIGN_ID = CAMPAIGN.id LEFT JOIN CONSTIT_RELATIONSHIPS cr on a.CONTACT_ID = cr.ID

"Category" and "Action type" both roughly map to "Activity Type". Same for "status" and "COMPLETED" and "COMPLETED_DATE" mapping to "activity_status". RE lets you designate a related Campaign, Fund and Proposal; out of the box, Civi only supports Campaign. The auto-reminder is more flexible than you can get with scheduled reminders in Civi without getting very complicated. "Solicitors" can't be mapped to a contact reference lookup, because more than one can be stored.

Note: The SQL above presumes only one note per action. If you have multiple notes per action, the action will be represented with multiple records, one per associated note. I'll try to provide SQL for extracting the notes separately at a later date.

Action Notes are stored in their own table. This maps to "Details" on a Civi activity, but you can log multiple notes per action in RE. Here's the SQL I used to extract them in preparation:

SELECT NotesID , Title , Description , Author , ActualNotes , ParentId , NotepadDate , TABLEENTRIES.LONGDESCRIPTION as Type FROM ActionNotepad LEFT JOIN TABLEENTRIES ON ActionNotepad.NoteTypeId = TABLEENTRIES.TABLEENTRIESID ORDER BY ParentId, ActionNotepad."SEQUENCE"

Here's some SQL to pull in the most relevant data:

SELECT se.CAPACITY , se.END_DATE , se.ID , se.NAME , se.START_DATE , se.DATE_ADDED , te.LONGDESCRIPTION as activity_type , se.INACTIVE , se.DISPLAYONCALENDAR , CAMPAIGN.DESCRIPTION as campaign , se.DESCRIPTION FROM SPECIAL_EVENT se LEFT JOIN CAMPAIGN on se.CAMPAIGN_ID = CAMPAIGN.id LEFT JOIN TABLEENTRIES te ON se.TYPEID = te.TABLEENTRIESID

RE notes (stored in the "ConstituentNotepad" table) can store quite a bit of data that Civi notes can not. They can store formatting (but with proprietary format tags, not HTML), inline photos, etc, and contain fields for date of note (separate from "Date Added" and "Date Changed"), the type of note, etc. Fortunately, they store plain-text versions of formatted notes in their own field. "Notes" is formatted; "ActualNotes" is plain text (except, well, where it isn't).

I've resolved this by removing notes over a certain length (above 15K and I assume you're a photo) and concatenating the fields I want to keep (e.g. Note Type and Description) with the ActualNotes field.

It may be possible to export the photos in the Notes using the techniques described below under "Media".

Here's the SQL I'm currently using to extract notes before doing transforms in Kettle:

SELECT Title as subject , Description , Author , ActualNotes , ParentId , cn.DateChanged , LONGDESCRIPTION as NoteType FROM ConstituentNotepad cn LEFT JOIN TABLEENTRIES ON NoteTypeId = TABLEENTRIESID

The files stored on the "Media" tab are held in the [dbo].[MEDIA] table in MS SQL. Assuming embedded and not linked data, the files are stored in the MS Access OLE format. It's relatively difficult to extract data from the OLE wrapper, though searching for extract access ole on any search engine will give you lots of options in a variety of languages. Blackbaud even has code to do it here, if you feel like using VBA.

I opted to use a commercial software package from Yohz Software called SQL Image Viewer. If you use this tool, enter the command:

SELECT OBJECT FROM [dbo].[MEDIA]

Then press "Execute Query", then press "Export" when it's done. This exports about 200 items/minute on a computer with a very slow hard drive.

If you want to decode the OLE containers on your own, there's the bcp CLI tool that installs with MS SQL, and you can run a SQL query from SQL Server Management Studio that extracts your OLE containers; see here. I experimented with this strategy, and this CLI command extracted an Access OLE container:

bcp "SELECT OBJECT FROM [July_Snapshot].[dbo].[media] WHERE ID = 1210 " queryout "C:\Users\Jon\Desktop\temp\test.ole" -T -N -S HOSTNAME\RE_EXPRESS

The approach I took was to copy all the files into the "custom files" directory as specified in Administer > System Settings > Directories. Then I used the Attachment entity of the API to import the file to an activity. For the Media tab, I created activities especially to import the media onto.

Here's an example of the correct usage of the API to add in image "drill.jpg" to an activity with an ID of 628:

$result = civicrm_api3('Attachment', 'create', array(

'sequential' => 1,

'name' => "drill.jpg",

'mime_type' => "image/jpeg",

'entity_id' => 628,

'entity_table' => "civicrm_activity",

'options' => array('move-file' => "/home/jon/local/civicrm-buildkit/build/d46/sites/default/files/civicrm/custom/drill.jpg"),

));

Note that Civi will rename your files with random characters at the end, so this action is not idempotent. Keep a reserve copy of your exported RE media to roll back to!

If you use the API CSV import tool, your CSVs should look like this:

"name",entity_id,"entity_table","mime_type","options.move-file" "100.png",87511,"civicrm_activity","image/png","/home/jon/local/lccr/wp-content/plugins/files/civicrm/custom/100.png" "1000.pdf",88411,"civicrm_activity","application/pdf","/home/jon/local/lccr/wp-content/plugins/files/civicrm/custom/1000.pdf"

Open each CSV file in Excel or similar. Sort each field by ascending AND descending to see if any data is stored in that field. If every record has no data or the same data, delete it - it's not being tracked in the current system. If you see only one or two records with a particular field, they're also probably fine to go, but check with the client first.

Next, strip out all of the constituent information except for primary/foreign keys. I like to keep in First/Middle/Last name just for human readability though. So leave in those three fields, plus any field with the word "ID" in it. This is your base constituent info, and will be in every other export you do.

Now comes the fun part! Export each table, one at a time, by adding those fields to an export that already includes the base constituent info.

For one-to-many relationships, the system will ask you how many instances of the information to export. I default to 12, then look over the data to see how many are actually used, then re-export with a higher or lower number.

I also remove records that don't contain the relevant data. For instance, when exporting Solicit Codes, I sort by the first Solicit Code. Then I scroll down past the folks that have Solicit Codes to those who have none, and delete the rows for folks who have none.

Note that for simplicity's sake, RE contains many views of the tables that, if you export them all, you'll have redundant data. There's no need to export "First Gift", "Last Gift", or "Largest Gift" - simply export all gifts. Likewise for "Preferred Address".

When exporting one-to-many tables that themselves contain one-to-many tables (e.g. Addresses contains Phones), do NOT select 12 of each! That means you're exporting 144 phone numbers per record. First determine the maximum number of addresses being tracked, re-export with that number, THEN export with phone numbers. Also, it's reasonable to export with 5 phone numbers per address.

NOTE: Letters sent is incomplete, there's more than 12 letters to some folks!

GIFTS is related to constituent on the last column (Constituent System Record ID)

If you're extracting data from the SQL back-end, you'll see that the RE equivalent to Civi option groups is "code tables". There's two functions that handle lookups: dbo.GetTableEntryDescription and dbo.GetTableEntryDescSlim. To determine where the data is being accessed by the function, see "Deciphering MS SQL", below. Use the "lTableNumber" passed to those functions and you'll find your data in dbo.CODETABLES (comparable to civicrm_option_group), dbo.CODETABLEMAP and dbo.TABLEENTRIES (comparable to civicrm_option_value).